Building CoinBet - Price Feed

This is the second article in a series documenting how I’m building CoinBet. In the first post, we covered the basic idea of the app and set up the initial structure. Now, we’re wiring up the real-time price feed, optimizing updates, and observing useful metrics.

⚡ Heads up

This isn’t a LiveView tutorial. I’m assuming you already know your way around Elixir, Phoenix, and LiveView. The goal here is to document the journey, share real-world challenges, and hopefully spark some ideas, not to explain the basics.

Let’s get into it.

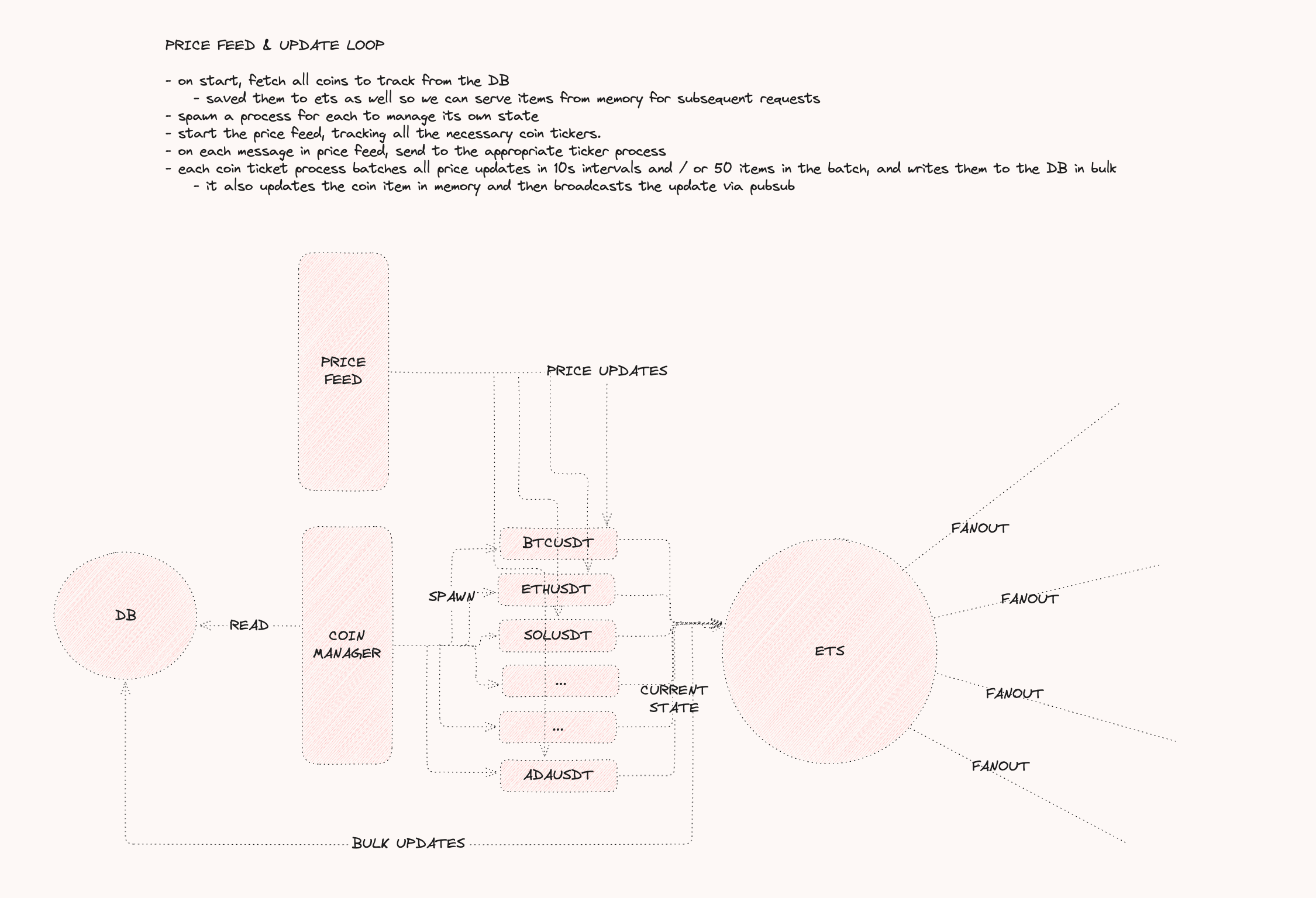

To kick things off, let’s walk through a high-level view of the data flow. When the system boots up, we load all supported coins from the database and spawn a long-lived process (a GenServer) for each one. A single coin can have tons of trade actions flying in at any given moment, and it’s neither necessary nor smart to save every one individually, so we’ll do a little batching: each GenServer acts as a buffer, collecting updates for 10 seconds before flushing them to the database in one batch.
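Stripped of OTP, the batching idea is just "append now, write later". Below is a minimal JavaScript sketch of one per-coin buffer. `CoinBuffer` and the `persist(symbol, batch)` callback (standing in for a single bulk insert) are hypothetical names, not CoinBet's code. In Elixir, the usual way to get the same periodic flush is a GenServer that schedules a message to itself (for example with `Process.send_after/3`); the article doesn't show how CoinBet does it.

```javascript
// Minimal sketch of a per-coin write buffer (hypothetical names).
// In CoinBet this role is played by a GenServer; a plain class stands in here.
class CoinBuffer {
  constructor(symbol, persist, intervalMs = 10000) {
    this.symbol = symbol;
    this.persist = persist; // hypothetical: writes one batch to the database
    this.intervalMs = intervalMs; // the article flushes every 10 seconds
    this.pending = [];
    this.timer = null;
  }

  // Called for every incoming trade: memory only, no database work.
  push(update) {
    this.pending.push(update);
  }

  // Hand everything collected so far to `persist` in a single call,
  // then start a fresh buffer. Returns the number of updates flushed.
  flush() {
    if (this.pending.length === 0) return 0;
    const batch = this.pending;
    this.pending = [];
    this.persist(this.symbol, batch);
    return batch.length;
  }

  // Flush on a fixed interval.
  start() {
    this.timer = setInterval(() => this.flush(), this.intervalMs);
  }

  // Stop the timer and flush whatever arrived since the last tick.
  stop() {
    clearInterval(this.timer);
    this.timer = null;
    return this.flush();
  }
}

// Usage: three trades arrive, one database write happens.
const writes = [];
const btc = new CoinBuffer("BTCUSDT", (symbol, batch) =>
  writes.push({ symbol, count: batch.length })
);
btc.push({ price: 1.0 });
btc.push({ price: 1.1 });
btc.push({ price: 1.2 });
btc.flush();
console.log(writes); // [ { symbol: 'BTCUSDT', count: 3 } ]
```

The trade-off is the usual one for write-behind buffers: far fewer database round trips, at the cost of losing up to one interval of unflushed updates if the process crashes.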

Because we already have all the coin data in memory and we’re receiving real-time updates, we no longer need to hit the database just to serve users. When a user lands on the site, the latest coin data is pulled straight from an :ets table. (If you’re not familiar with :ets, it stands for Erlang Term Storage: an in-memory store built into the Erlang VM, a bit like Redis, that can hold any Erlang term.)

For the live price feed, we’ll use Binance’s WebSocket API. It has some limits, but honestly, it works well and is surprisingly free, so no complaints. We’ll open a single connection to the feed, and for each incoming message, we route it to the relevant coin’s GenServer. After the message is handled, the new price gets saved into the :ets table, overwriting the previous one (since we only care about the latest info for the site).
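The route-then-cache step can be sketched the same way. This assumes each message is a Binance combined-stream frame (`{"stream": ..., "data": {...}}`) whose payload carries the symbol in `s` and the last price in `c`, as the 24h ticker stream does; the article doesn't say which stream CoinBet uses. The per-coin handlers stand in for the GenServers and a `Map` stands in for the :ets table; all names are hypothetical, and batching and persistence are left out.

```javascript
// Sketch of "route to the coin's process, then overwrite the cache".
// Assumption: Binance combined-stream frames shaped {stream, data}, with the
// symbol in data.s and the last price in data.c (as in the 24h ticker stream).
const latest = new Map(); // stands in for the :ets table: symbol -> latest coin

// One handler per coin, standing in for the per-coin GenServers.
function makeCoinHandler(symbol) {
  return (data) => {
    const coin = { symbol, price: Number(data.c) };
    latest.set(symbol, coin); // overwrite: only the newest value is kept
    return coin;
  };
}

const handlers = new Map(
  ["BTCUSDT", "ETHUSDT"].map((symbol) => [symbol, makeCoinHandler(symbol)])
);

// One connection, many coins: dispatch each frame by its symbol.
function route(rawFrame) {
  const { data } = JSON.parse(rawFrame);
  const handler = handlers.get(data.s);
  return handler ? handler(data) : null; // ignore symbols we don't track
}

route(JSON.stringify({ stream: "btcusdt@ticker", data: { s: "BTCUSDT", c: "100.5" } }));
route(JSON.stringify({ stream: "btcusdt@ticker", data: { s: "BTCUSDT", c: "101.0" } }));
console.log(latest.get("BTCUSDT")); // { symbol: 'BTCUSDT', price: 101 }
```

Because the cache only ever holds the newest value per coin, reads stay O(1) no matter how many ticks have arrived.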

We still persist all price movements to the database, because later we’ll need to match exact prices at specific timestamps when handling payouts, but that’s a story for another day. After caching the price, we broadcast a PubSub message with the relevant metadata. This way, each CoinCard live_component can receive updates for its own coin as soon as the cache changes.
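The broadcast side is plain topic-per-coin publish/subscribe. Below is a small in-memory JavaScript stand-in whose message shape loosely mirrors what the LiveView matches on later (`topic: :cache_update`, `table: :coins`, `payload`). `CoinPubSub` and its methods are hypothetical and are not Phoenix.PubSub's API.

```javascript
// Hypothetical in-memory stand-in for per-coin PubSub topics.
class CoinPubSub {
  constructor() {
    this.subscribers = new Map(); // coinId -> Set of callbacks
  }

  subscribe(coinId, callback) {
    if (!this.subscribers.has(coinId)) this.subscribers.set(coinId, new Set());
    this.subscribers.get(coinId).add(callback);
  }

  unsubscribe(coinId, callback) {
    const subs = this.subscribers.get(coinId);
    if (subs) subs.delete(callback);
  }

  // Called right after the cache write; only this coin's subscribers hear about it.
  // Returns how many subscribers were notified.
  broadcast(coin) {
    const message = { topic: "cache_update", table: "coins", payload: coin };
    const subs = this.subscribers.get(coin.id) ?? new Set();
    for (const callback of subs) callback(message);
    return subs.size;
  }
}

const pubsub = new CoinPubSub();
const received = [];
const onUpdate = (msg) => received.push(msg.payload.price);
pubsub.subscribe(1, onUpdate);
pubsub.broadcast({ id: 1, price: 0.72 }); // delivered
pubsub.broadcast({ id: 2, price: 3.1 }); // nobody subscribed to coin 2
console.log(received); // [ 0.72 ]
```

Keying subscriptions by coin is what later makes it cheap to subscribe a page to only the coins it actually shows.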

High level overview of the price feed

To get started with development, I set up two tables: coins and coin_prices. Of course, we need some initial coin data in the database. To make life easier both locally and when shipping to production, I added a list of 28 coins to a JSON file and inserted them through a migration (a migration runs once and only once per database). The migration looks like this:

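```elixir
# Module name is illustrative; the article shows only the body.
defmodule CoinBet.Repo.Migrations.SeedCoins do
  use Ecto.Migration

  # priv/static/coins.json holds the initial list of coins.
  @path "#{:code.priv_dir(:coin_bet)}/static/coins.json"

  def up do
    # One timestamp for the whole batch, so every row gets the same value.
    now = DateTime.utc_now()

    coins =
      @path
      |> File.read!()
      |> Jason.decode!()
      |> Enum.map(fn coin ->
        Map.merge(coin, %{"inserted_at" => now, "updated_at" => now})
      end)

    # Schemaless insert into "coins"; rows hitting a unique constraint are skipped.
    CoinBet.Repo.insert_all("coins", coins, on_conflict: :nothing)
  end

  def down do
    CoinBet.Repo.delete_all("coins")
  end
end
```

After that, I set up the price feed WebSocket client using the awesome WebSockex library. The client loads the coins, extracts their symbols, and subscribes to all of them over a single connection. Once messages start rolling in, the plan above kicks in, and it works nicely.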

I’ve intentionally glossed over this part of the price feed for brevity and because it’s not the main focus of the article.
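For the curious, "subscribe to all symbols in a single connection" can be as simple as building one URL for Binance's combined streams endpoint (`wss://stream.binance.com:9443/stream?streams=...`). This is a JavaScript sketch rather than a WebSockex client; the `@ticker` stream type and the `combinedStreamUrl` name are assumptions, since the article doesn't say which stream CoinBet listens to.

```javascript
// Build one Binance combined-stream URL for every tracked coin.
// Assumption: the per-symbol "<symbol>@ticker" stream. Binance expects
// lowercase symbols in stream names.
const BASE = "wss://stream.binance.com:9443/stream";

function combinedStreamUrl(symbols, streamType = "ticker") {
  const streams = symbols.map((s) => `${s.toLowerCase()}@${streamType}`);
  return `${BASE}?streams=${streams.join("/")}`;
}

console.log(combinedStreamUrl(["BTCUSDT", "ETHUSDT"]));
// wss://stream.binance.com:9443/stream?streams=btcusdt@ticker/ethusdt@ticker
```

On a combined stream, every frame arrives wrapped as `{"stream": "...", "data": {...}}`, which is what lets a single connection feed every coin's process.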

Live updates

In order to start receiving price changes as they arrive, we need to subscribe to updates for each coin. The simplest way to achieve this is to subscribe in the LiveView’s mount/3 callback since we’re already loading all the coins right there. Something like this:

```elixir
def mount(_params, _session, socket) do
  # Latest coin data comes from the ETS cache, not the database.
  coins =
    Ets.list_coins()
    |> Enum.map(&to_coin_model/1)
    |> Enum.filter(& &1.market_cap)
    |> Enum.sort_by(& &1.market_cap, :desc)

  # Subscribe only in the connected (stateful) mount.
  if connected?(socket) do
    Enum.each(coins, &CoinBet.Ets.subscribe_to_updates(&1.id))
  end

  {:ok, assign(socket, coins: coins)}
end
```

The connected?/1 check matters because subscribing only makes sense in the long-lived, connected LiveView process; the process handling the initial HTTP render doesn’t stick around to receive messages. LiveView invokes mount/3 twice when a page loads:

- First, during the regular HTTP request. At this point, it just returns static HTML.

- Then, it upgrades the connection from the client to a stateful one. This is where things become “live.”

There’s a pretty obvious flaw with this setup though: we’re subscribing the user to every single coin, even if they never actually see them. For 28 coins, it’s not a big deal, but as we add more to the list, it starts to hurt: wasted server resources, sending unnecessary bytes to the client, and it only gets worse as more users show up.
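To put rough numbers on that: every (user, subscribed coin) pair turns each price tick into one more message the server has to push. The helper below just multiplies those factors; the 1,000 users, 6 visible cards and 1 tick per second are made-up inputs for illustration, not CoinBet measurements.

```javascript
// Messages the server pushes per second, ignoring any batching or coalescing.
function messagesPerSecond(users, coinsPerUser, ticksPerCoinPerSecond) {
  return users * coinsPerUser * ticksPerCoinPerSecond;
}

// Hypothetical inputs: 28 coins listed, ~6 cards visible at a time, 1 tick/s per coin.
const everyCoin = messagesPerSecond(1000, 28, 1);
const visibleOnly = messagesPerSecond(1000, 6, 1);
console.log(everyCoin, visibleOnly); // 28000 6000
```

The ratio between the two is simply listed coins over visible coins, so the gap widens with every coin added to the list.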

A better approach is to subscribe only to coins the user can actually see, i.e. those inside the viewport. Since this has to be triggered from the client side, I added a JS hook to each CoinCard. (If you remember from the first article, CoinCard is a live_component that wraps the markup and events for each coin.)

```javascript
Hooks.MaybeSubscribeToUpdates = {
  mounted() {
    // Target for pushEventTo: the CoinCard component this element belongs to.
    const component = this.el.getAttribute("component");

    this.observer = new IntersectionObserver(
      ([entry]) => {
        // Visible -> subscribe, off-screen -> unsubscribe.
        const event = entry.isIntersecting ? "subscribe" : "unsubscribe";
        this.pushEventTo(component, event);
      },
      // root: null means the viewport; react as soon as 1% is visible.
      { root: null, threshold: 0.01 },
    );

    this.observer.observe(this.el);
  },

  destroyed() {
    const component = this.el.getAttribute("component");
    this.pushEventTo(component, "unsubscribe");
    // disconnect() takes no arguments and stops observing all targets.
    this.observer && this.observer.disconnect();
  },
};
```

When the element mounts, we attach an IntersectionObserver to it. If even 1% of the element is visible (root: null means the viewport), we push a subscribe event to the component; when it leaves the viewport, we push an unsubscribe.

The component handles it like this:

```elixir
def handle_event("subscribe", _params, socket) do
  Ets.subscribe_to_updates(socket.assigns.coin.id)
  {:noreply, socket}
end

def handle_event("unsubscribe", _params, socket) do
  Ets.unsubscribe_from_updates(socket.assigns.coin.id)
  {:noreply, socket}
end
```

Now the user only gets updates for coins they’re looking at.

How does the CoinCard itself get the update? PubSub delivers messages for a topic as regular messages to the mailbox of the process that subscribed to it. But CoinCard is a live_component, and live_components are not processes: they run inside their parent LiveView’s process. So the subscription made in the component’s handle_event actually belongs to the parent LiveView, and we have to forward each update to the component manually using the built-in send_update/3 function.

```elixir
# coin_live.ex
# The parent LiveView process receives the PubSub message...
def handle_info(%{topic: :cache_update, payload: coin, table: :coins}, socket) do
  CoinCard.push_update(coin.id, to_coin_model(coin))
  {:noreply, socket}
end

# coin_card.ex
# ...and hands it to the matching CoinCard via its component id.
def push_update(coin_id, update) do
  send_update(__MODULE__, id: "coin-card-#{coin_id}", coin: update)
end
```

We now have a constant stream of coin updates being broadcast to clients. Not bad at all. One tiny improvement I made was adding a visual indicator when a coin’s price changes: I installed the countup.js library and enabled it on the price element using a JS hook.

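```heex
<div>
  <p class="text-sm text-muted-foreground">Current Price</p>
  <p class="text-xl font-bold" id={"coin-price-#{@coin_id}"} phx-hook="CountUpPrice">
    {format_currency(@current_price, locale: @locale)}
  </p>
</div>
```

```javascript
import { CountUp } from "countup.js";

Hooks.CountUpPrice = {
  mounted() {
    // Start value for the first animation: the server-rendered price.
    // The regex cleanup assumes "." is the decimal separator.
    this.lastValue = parseFloat(this.el.innerText.replace(/[^0-9.-]/g, "")) || 0;
    // currencyFormatter is a helper defined elsewhere in the app (not shown).
    this.formatter = currencyFormatter(window.locale, "USD");
  },

  updated() {
    // LiveView has just patched in the new price; animate from the old one to it.
    const newValue = parseFloat(this.el.innerText.replace(/[^0-9.-]/g, ""));
    if (Number.isNaN(newValue)) return; // keep the last good value

    const countUp = new CountUp(this.el, newValue, {
      startVal: this.lastValue,
      duration: 1, // seconds
      formattingFn: (value) => this.formatter.format(value),
      decimalPlaces: 2,
    });

    if (!countUp.error) {
      countUp.start();
    }

    this.lastValue = newValue;
  },
};
```

Now on each update, there’s a smooth animation that counts up or down from the previous coin price to the current one. Pretty neat!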
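One caveat with this hook: the `/[^0-9.-]/g` cleanup assumes "." is the decimal separator. Since the price is formatted per `@locale`, a locale such as de-DE renders something like "0,72 $", which the regex turns into 72. A possible guard, sketched with the standard `Intl.NumberFormat.prototype.formatToParts` API, is to look up the locale's decimal separator first; `parseLocalizedNumber` is a hypothetical helper, not part of the app.

```javascript
// Parse a localized number/currency string back into a float, using the
// locale's own decimal separator instead of assuming ".".
function parseLocalizedNumber(text, locale) {
  const decimal = new Intl.NumberFormat(locale)
    .formatToParts(1.1)
    .find((part) => part.type === "decimal").value;

  let normalized = "";
  for (const ch of text) {
    if (ch >= "0" && ch <= "9") normalized += ch; // digits
    else if (ch === "-") normalized += ch; // sign
    else if (ch === decimal) normalized += "."; // locale decimal -> "."
    // everything else (currency symbols, group separators, spaces) is dropped
  }
  return parseFloat(normalized);
}

const usd = (locale) =>
  new Intl.NumberFormat(locale, { style: "currency", currency: "USD" });
console.log(parseLocalizedNumber(usd("en-US").format(1234.56), "en-US")); // 1234.56
console.log(parseLocalizedNumber(usd("de-DE").format(1234.56), "de-DE")); // 1234.56
```

Inside the hook, both `parseFloat(...)` calls would then become `parseLocalizedNumber(this.el.innerText, window.locale)`.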

Summary:

- ✅ Only subscribe to visible coins

- ✅ Forward updates efficiently

- ✅ Animate price changes without killing performance

Now that updates are flying in, how heavy are they?

Live StatsBar

The first thing I was curious about was how much payload these price updates would send over time. LiveView is touted for sending only minimal diffs, but how minimal? In this section, we’ll look at that with the following questions in mind:

- ☐ How much payload are we sending to the client, and how does it look over time?

- ☐ How does it compare with other websites?

- ☐ Does it really matter?

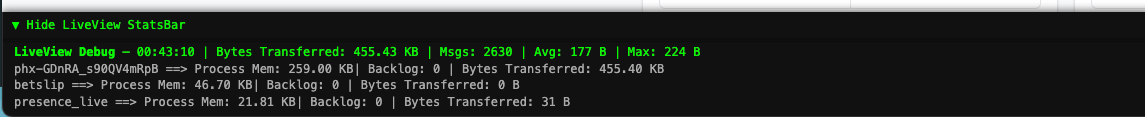

To answer these, I built a StatsBar that hooks into the Phoenix Socket to intercept every incoming message and report its size. It groups messages by LiveView, in case there are several on the page, and also accepts custom metrics from the server. It sits at the bottom of the screen, which makes it convenient to glance at, and it can be collapsed.
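The article doesn't show the StatsBar's code, so here is a minimal, hypothetical core for the measuring part only. It assumes you can get at each raw WebSocket text frame as a string (how you hook into the socket is left out) and that frames use Phoenix's array format `[join_ref, ref, topic, event, payload]`, as in the diff shown further down. Sizes are counted in UTF-8 bytes with `TextEncoder`, because a string's `.length` undercounts non-ASCII characters (a "€" is 3 bytes in UTF-8), and stats are grouped per `lv:` topic, i.e. per LiveView.

```javascript
// Hypothetical core of a payload-size tracker: per-LiveView count,
// average and max message size, measured in UTF-8 bytes.
class PayloadStats {
  constructor() {
    this.byView = new Map(); // topic -> { count, totalBytes, maxBytes }
    this.encoder = new TextEncoder();
  }

  // `frame` is a raw text frame: [join_ref, ref, topic, event, payload]
  record(frame) {
    const [, , topic] = JSON.parse(frame);
    // Only LiveView topics; skips e.g. "phoenix" heartbeat replies.
    if (typeof topic !== "string" || !topic.startsWith("lv:")) return;

    const bytes = this.encoder.encode(frame).length;
    const stats = this.byView.get(topic) ?? { count: 0, totalBytes: 0, maxBytes: 0 };
    stats.count += 1;
    stats.totalBytes += bytes;
    stats.maxBytes = Math.max(stats.maxBytes, bytes);
    this.byView.set(topic, stats);
  }

  summary(topic) {
    const s = this.byView.get(topic);
    if (!s) return null;
    return { count: s.count, avgBytes: s.totalBytes / s.count, maxBytes: s.maxBytes };
  }
}

// Usage: the compact form of the diff frame shown below, plus a heartbeat reply.
const frame = JSON.stringify([
  "4", null, "lv:phx-GDnUCgeme5u47iwF", "diff",
  { c: { "8": {
    "3": { "1": "US$0.72" },
    "6": { "2": "US$0.72", "4": ' min="0.5784"', "5": ' max="0.8676"',
           "6": ' step="0.0029"', "7": ' value="0.723"', "9": "US$0.72" },
  } } },
]);
const stats = new PayloadStats();
stats.record(frame);
stats.record(JSON.stringify([null, "12", "phoenix", "phx_reply", { status: "ok", response: {} }]));
console.log(stats.summary("lv:phx-GDnUCgeme5u47iwF")); // { count: 1, avgBytes: 201, maxBytes: 201 }
```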

Live StatsBar

From the image above, our LiveView sends less than 200 bytes per message on average, with a maximum of 224 bytes over 43 minutes. That’s insanely small! While inspecting the WS tab in Chrome DevTools, I found that the payload could be made even smaller in some cases. For example:

```json
[
  "4",
  null,
  "lv:phx-GDnUCgeme5u47iwF",
  "diff",
  {
    "c": {
      "8": {
        "3": {
          "1": "US$0.72"
        },
        "6": {
          "2": "US$0.72",
          "4": " min=\"0.5784\"",
          "5": " max=\"0.8676\"",
          "6": " step=\"0.0029\"",
          "7": " value=\"0.723\"",
          "9": "US$0.72"
        }
      }
    }
  }
]
```

We could move the min, max and step calculation to the client with a hook and shave about 72 bytes off this payload (from 201 down to 129). But I’m satisfied with it as is, and it’s quite convenient to do on the server.
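What would that client-side calculation look like? The article doesn't give the formula, but the numbers in this diff are consistent with a slider spanning ±20% of the price (0.723 × 0.8 = 0.5784, 0.723 × 1.2 = 0.8676) and a step of roughly one hundredth of that range, rounded to 4 decimals. Treat `sliderBounds` below as a guess built on that reading, not CoinBet's code; a hook could call it and set the `min`, `max` and `step` attributes itself.

```javascript
// Guessed formula (inferred from one diff, not confirmed by the article):
// slider range = price ± 20%, step = 1/100 of that range, 4 decimal places.
function sliderBounds(price, spread = 0.2, decimals = 4) {
  const min = price * (1 - spread);
  const max = price * (1 + spread);
  const step = (max - min) / 100;
  return {
    min: min.toFixed(decimals),
    max: max.toFixed(decimals),
    step: step.toFixed(decimals),
  };
}

console.log(sliderBounds(0.723)); // { min: '0.5784', max: '0.8676', step: '0.0029' }
```

The server would then only need to send the price itself.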

I also inspected the WS panel on binance.com/en. That page lists 5 popular coins with their real-time prices, and each update was around 50 KB on average. To be fair, they send price data for a lot more coins and perhaps reuse a general-purpose API for everything, but that’s still much heavier than the LiveView version.

Does it really matter?

I’ll let you be the judge. A general rule of performance is: less is better. Less work for the CPU, less bandwidth, faster UX, and lower infra costs. And in many cases, bandwidth is your most expensive server bill.

But at what cost?

LiveView’s tiny diffs come at a price: memory. Each connected user gets a server-side LiveView process that holds their state (assigns, plus bookkeeping about what has already been rendered) so it can compute diffs later. According to the StatsBar, my LiveView process used around 260 KB of memory per user. At that rate, 100 concurrent users would take about 26 MB of RAM. Not crazy, but definitely something to keep in mind.
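Since memory grows linearly with connected users, a rough estimate is a single multiplication. The helper below assumes the ~260 KB per process read off the StatsBar holds for every user (in practice it varies with assigns and page content) and uses decimal units; the user counts are illustrative.

```javascript
// Rough server memory for N connected LiveViews, in decimal units (1 MB = 1000 KB).
function liveViewMemoryMB(users, kbPerProcess = 260) {
  return (users * kbPerProcess) / 1000;
}

for (const users of [100, 1000, 10000]) {
  console.log(`${users} users -> ~${liveViewMemoryMB(users)} MB`);
}
// 100 users -> ~26 MB
// 1000 users -> ~260 MB
// 10000 users -> ~2600 MB
```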

For comparison: a React app would push all the state and diffing work to the browser, freeing up server memory almost entirely.

Still, I’m happy with the tradeoff. With LiveView, I get:

- No need for extra routes or controllers to fetch/update state

- No client-server mismatch bugs

- No need for duplicated authorization logic

- One source of truth that I can test end to end

I’m pretty okay with that.

So to conclude:

- ✅ How much payload are we sending to the client? - Pretty tiny

- ✅ How does it compare with other websites? - LiveView is a clear winner

- ❓Does it really matter? - Honestly, up to you - but at the very least, it's nice to have.

Lastly, a quick kamal deploy and the latest updates are live.